For the definition of IPMI, see http://benjr.tw/11240

The test environment is CentOS 7 x64.

When you use ipmitool, you may find that it cannot locate the IPMI device.

[root@localhost ~]# ipmitool

Could not open device at /dev/ipmi0 or /dev/ipmi/0 or

[root@localhost ~]# systemctl status ipmi

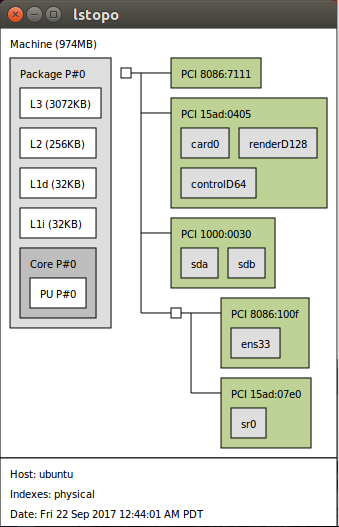
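The error above means none of the device nodes ipmitool tried could be opened. A minimal sketch for checking the two paths named in the message follows. The function name `find_ipmi_dev` and its optional root-prefix argument are assumptions made for illustration, and the check uses `-e` (exists) rather than verifying that the node is a character device.

```shell
# Print the first IPMI device node that exists, or return 1 if none does.
# $1 is a hypothetical root prefix (default: empty, meaning the real /dev).
find_ipmi_dev() {
    root="${1:-}"
    for dev in /dev/ipmi0 /dev/ipmi/0; do
        if [ -e "$root$dev" ]; then
            printf '%s\n' "$root$dev"
            return 0
        fi
    done
    return 1
}
```

Running `find_ipmi_dev || echo "no IPMI device node"` gives a quick yes/no answer before going further.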

First check whether the BMC device exists. The BMC here is not a PCI-E device, so lspci shows nothing and dmesg is the only place to look.

[root@localhost ~]# lspci | grep ipmi

[root@localhost ~]# lspci | grep bmc

[root@localhost ~]# dmesg |grep -i ipmi

[ 20.120464] ipmi message handler version 39.2

[ 20.231682] IPMI System Interface driver.

[ 20.231712] ipmi_si: probing via SMBIOS

[ 20.231714] ipmi_si: SMBIOS: io 0xca8 regsize 1 spacing 1 irq 0

[ 20.231715] ipmi_si: Adding SMBIOS-specified kcs state machine

[ 20.231717] ipmi_si: probing via SPMI

[ 20.231718] ipmi_si: SPMI: io 0xca8 regsize 1 spacing 1 irq 0

[ 20.231719] ipmi_si: Adding SPMI-specified kcs state machine duplicate interface

[ 20.231721] ipmi_si: Trying SMBIOS-specified kcs state machine at i/o address 0xca8, slave address 0x20, irq 0

[ 20.452250] ipmi device interface

[ 20.644368] ipmi_si ipmi_si.0: Found new BMC (man_id: 0x00016c, prod_id: 0x4147, dev_id: 0x20)

[ 20.644385] ipmi_si ipmi_si.0: IPMI kcs interface initialized
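The line that matters in this log is "Found new BMC". As a rough sketch, the identifiers on that line can be pulled out with sed. The function name `bmc_ids` is hypothetical, and the pattern assumes the message format shown in the log above, which may differ on other kernel versions.

```shell
# Read kernel log text on stdin and print "man_id prod_id dev_id" taken from
# the "Found new BMC" line. Returns 1 if no such line is present.
bmc_ids() {
    sed -n 's/.*Found new BMC (man_id: \(0x[0-9a-fA-F]*\), prod_id: \(0x[0-9a-fA-F]*\), dev_id: \(0x[0-9a-fA-F]*\)).*/\1 \2 \3/p' | grep .
}
```

Typical use would be `dmesg | bmc_ids`. For the log above it prints the hexadecimal values 0x00016c, 0x4147 and 0x20, which are 364, 16711 and 32 in decimal.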

Check that the IPMI-related kernel modules are loaded.

[root@localhost ~]# lsmod |grep -i ipmi

ipmi_devintf 17572 0

ipmi_si 53582 0

ipmi_msghandler 46608 2 ipmi_devintf,ipmi_si

- ipmi_devintf: Linux character device interface for the message handler.

- ipmi_si: An IPMI system interface driver for the message handler. This module supports various IPMI system interfaces such as KCS, BT, SMIC, and even SMBus.

- ipmi_msghandler: Incoming and outgoing message handler for IPMI interfaces.
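The module check can be scripted. The sketch below reads `lsmod` output and reports which of the three modules are absent. The function name `missing_ipmi_modules` is an assumption for illustration. Note that a module compiled into the kernel does not appear in `lsmod`, so a reported module is only a hint.

```shell
# Read `lsmod` output on stdin and print each of the three IPMI modules that
# is not listed. Prints nothing when all of them are loaded.
missing_ipmi_modules() {
    awk '
        { loaded[$1] = 1 }
        END {
            n = split("ipmi_msghandler ipmi_si ipmi_devintf", want, " ")
            for (i = 1; i <= n; i++)
                if (!(want[i] in loaded)) print want[i]
        }
    '
}
```

One way to use it is `for m in $(lsmod | missing_ipmi_modules); do modprobe "$m"; done`, which tries to load whatever is missing.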

Check that the required packages are installed.

[root@localhost ~]# yum install OpenIPMI ipmitool

Make sure the ipmi service is enabled (starts at boot) and started (running now).

[root@localhost ~]# systemctl enable ipmi

[root@localhost ~]# systemctl start ipmi

[root@localhost ~]# systemctl status ipmi

● ipmi.service - IPMI Driver

Loaded: loaded (/usr/lib/systemd/system/ipmi.service; enabled; vendor preset: enabled)

Active: active (exited) since Wed 2017-04-19 14:34:36 CST; 38min ago

Process: 1190 ExecStart=/usr/libexec/openipmi-helper start (code=exited, status=0/SUCCESS)

Main PID: 1190 (code=exited, status=0/SUCCESS)

CGroup: /system.slice/ipmi.service

When there are no errors, Loaded shows loaded and Active shows active.
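The same two fields can be checked from a script. This is only a sketch: the function name `service_looks_ok` is hypothetical, and it matches the `Loaded:` and `Active:` lines of `systemctl status` output as printed on this CentOS 7 system.

```shell
# Read `systemctl status` output on stdin. Succeed only if the unit reports
# "Loaded: loaded" and "Active: active".
service_looks_ok() {
    awk '
        $1 == "Loaded:" && $2 == "loaded" { l = 1 }
        $1 == "Active:" && $2 == "active" { a = 1 }
        END { exit !(l && a) }
    '
}
```

For example, `systemctl status ipmi | service_looks_ok && echo OK`. If parsing text feels fragile, `systemctl is-enabled ipmi` and `systemctl is-active ipmi` report the same state directly.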

At this point you can try the command below to check the state of the BMC / IPMI.

[root@localhost ~]# ipmitool mc info

Device ID : 32

Device Revision : 3

Firmware Revision : 1.04

IPMI Version : 2.0

Manufacturer ID : 364

Manufacturer Name : Unknown (0x16C)

Product ID : 16711 (0x4147)

Product Name : Unknown (0x4147)

Device Available : yes

Provides Device SDRs : no

Additional Device Support :

Sensor Device

SEL Device

FRU Inventory Device

IPMB Event Receiver

IPMB Event Generator

Bridge

Aux Firmware Rev Info :

0x01

0x00

0x00

0x00
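To pick a single value out of this output, for instance in a monitoring script, something like the following sketch may help. The function name `mc_field` is an assumption. It expects the `name : value` layout shown above and returns the first matching field.

```shell
# Read `ipmitool mc info` output on stdin and print the value of the field
# named in $1. Returns 1 if the field is not found.
mc_field() {
    awk -F ':' -v key="$1" '
        {
            name = $1
            gsub(/^[ \t]+|[ \t]+$/, "", name)      # trim the field name
            if (name == key) {
                val = substr($0, index($0, ":") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", val)   # trim the value
                print val
                found = 1
                exit
            }
        }
        END { exit !found }
    '
}
```

For example, `ipmitool mc info | mc_field "Firmware Revision"` prints only the firmware revision.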